I wrote a piece recently about Copilot+ Recall, a new Microsoft Windows 11 feature which, in the words of Microsoft CEO Satya Nadella, takes “screenshots” of your PC constantly and makes them into an instantly searchable database of everything you’ve ever seen. As he says, it is photographic memory of your PC life.

I got ahold of the Copilot+ software and got it working on a system without an NPU about a week ago, and I’ve been exploring how this works in practice, so we’ll look into that shortly. First, I want to look at how this feature was received, as I think it is important to understand the context.

The overwhelmingly negative reaction has probably taken Microsoft leadership by surprise. For almost everybody else, it won’t have. This was like watching Microsoft become an Apple Mac marketing department.

At a surface level, it is great if you are a manager at a company with too much to do and too little time as you can instantly search what you were doing about a subject a month ago.

In practice, that audience’s needs are a very small (tiny, in fact) portion of the Windows userbase, and frankly, talking about screenshotting the things people in the real world (not the executive world) do is basically like punching customers in the face. The echo chamber effect inside Microsoft is real here, and oh boy… just oh boy. It’s a rare misfire, I think.

I think it’s an interesting, entirely optional feature with a niche initial user base that would require incredibly careful communication, cybersecurity, engineering and implementation. Copilot+ Recall doesn’t have these. The work clearly hasn’t been done properly to package it together.

A lot of Windows users just want their PCs so they can play games, watch porn, and live their lives as human beings who make mistakes… that they don’t always want to remember, and the idea that other people with access to the device could see a photographic memory is… very scary to a great many people on a deeply personal level. Windows is a personal experience. This shatters that belief.

I think they are probably going to set fire to the entire Copilot brand due to how poorly this has been implemented and rolled out. It’s an act of self harm at Microsoft in the name of AI, and by proxy real customer harm.

More importantly, at the time I pointed out this fundamentally breaks the promise of security in Windows.

I’d now like to detail why. Strap in — this is crazy.

I’m going to structure this as a Q&A with myself now, based on comments online, as it’s really interesting seeing how some people handwave the issues away.

Q. The data is processed entirely locally on your laptop, right?

A. Yes! They made some smart decisions here: there’s a whole subsystem of Azure AI etc code that processes on the edge.

Q. Cool, so hackers and malware can’t access it, right?

A. No, they can.

Q. But it’s encrypted.

A. When you’re logged into a PC and run software, things are decrypted for you. Encryption at rest only helps if somebody comes to your house and physically steals your laptop — that isn’t what criminal hackers do.

For example, InfoStealer trojans, which automatically steal usernames and passwords, have been a major problem for well over a decade, and now these can easily be modified to support Recall.

Q. But the BBC said data cannot be accessed remotely by hackers.

A. They were quoting Microsoft, but this is wrong. Data can be accessed remotely.

This is what the journalist was told for some reason:

Q. Microsoft say only that user can access the data.

A. This isn’t true, I can demonstrate another user account on the same device accessing the database.

Q. So how does it work?

A. Every few seconds, screenshots are taken. These are automatically OCR’d by Azure AI, running on your device, and written into an SQLite database in the user’s folder.

This database file has a record of everything you’ve ever viewed on your PC in plain text. OCR is a process of looking at an image and extracting the letters.
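To make concrete why plain text in an SQLite file matters, here is a minimal sketch. The table name, columns and sample rows are entirely made up for illustration (this is not Recall’s real schema, and it runs against an in-memory database rather than any real file). The point is only that once OCR text sits unencrypted in SQLite, any code running as the user can search it with an ordinary query.

```python
import sqlite3

# Hypothetical schema and data for illustration only; NOT Recall's real layout.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE captures ("
    "id INTEGER PRIMARY KEY, app TEXT, captured_at TEXT, ocr_text TEXT)"
)
rows = [
    ("browser", "2024-05-01T09:00:00", "Online banking: account balance 1,234.56"),
    ("mail", "2024-05-01T09:05:00", "Reminder: dentist appointment on Friday"),
    ("chat", "2024-05-01T09:10:00", "This message will disappear in 10 seconds"),
]
conn.executemany(
    "INSERT INTO captures (app, captured_at, ocr_text) VALUES (?, ?, ?)", rows
)


def search(connection, term):
    """Return (app, captured_at, ocr_text) rows whose OCR text contains term."""
    cur = connection.execute(
        "SELECT app, captured_at, ocr_text FROM captures "
        "WHERE ocr_text LIKE ? ORDER BY captured_at",
        (f"%{term}%",),
    )
    return cur.fetchall()


# A plain substring query is enough; no key or decryption step is involved.
hits = search(conn, "banking")
for app, captured_at, text in hits:
    print(app, captured_at, text)
```

Note that the “disappearing” chat message in the sample data is just as searchable as everything else, because the capture happened while it was on screen.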

Q. What does the database look like?

A:

https://x.com/GossiTheDog/status/1796218726808748367

Q. How do you obtain the database files?

A. They’re just files in AppData, in the new CoreAIPlatform folder.

Q. But it’s highly encrypted and nobody can access them, right?!

A. Here’s a few-second video of two Microsoft engineers accessing the folder:

https://cyberplace.social/@GossiTheDog/112535509953161486

Q. …But, normal users don’t run as admins!

A. According to Microsoft’s own website, in their Recall rollout page, they do:

In fact, you don’t even need to be an admin to read the database — more on that in a later blog.

Q. But a UAC prompt appeared in that video, that’s a security boundary.

A. According to Microsoft’s own website (and MSRC), UAC is not a security boundary:

Q. So… where is the security here?

A. They have tried to do a bunch of things but none of it actually works properly in the real world due to gaps you can drive a plane through.

Q. Does it automatically not screenshot and OCR things like financial information?

A. No:

Q. How large is the database?

A. It compresses well: several days of work comes to around 90kb. You can exfiltrate several months of documents and key presses in the space of a few seconds with an average broadband connection.
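As a rough sanity check on that claim, here is a back-of-the-envelope calculation. Only the 90kb figure comes from my testing. The rest are assumptions I’ve picked for illustration: that “several days” means three, that “several months” means six, that growth is linear, and that the uplink is 10 Mbit/s. It also ignores any further compression, so treat it as an estimate, not a measurement.

```python
def transfer_seconds(size_bytes, uplink_bits_per_second):
    """Time to send size_bytes over a link of the given speed, ignoring overhead."""
    return size_bytes * 8 / uplink_bits_per_second


KB = 1000

size_per_period = 90 * KB    # from the article: several days of use is ~90kb
days_per_period = 3          # assumption: "several days" taken as 3
months = 6                   # assumption: "several months" taken as 6
days = months * 30           # assumption: 30-day months
uplink = 10_000_000          # assumption: 10 Mbit/s upload speed

# Assumes the database grows linearly with days of use.
total_bytes = size_per_period * days / days_per_period
seconds = transfer_seconds(total_bytes, uplink)

print(f"{total_bytes / 1_000_000:.1f} MB in about {seconds:.1f} seconds")
```

Under those assumptions, six months of history is only a few megabytes, which is consistent with “a few seconds” on an ordinary connection.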

Q. How fast is search?

A. On device, really fast.

Q. Have you exfiltrated your own Recall database?

A. Yes. I have automated exfiltration, and made a website where you can upload a database and instantly search it.

I am deliberately holding back technical details until Microsoft ship the feature as I want to give them time to do something.

I actually have a whole bunch of things to show and think the wider cyber community will have so much fun with this when it is generally available… but I also think that’s really sad, as real world harm will ensue.

Q. What kind of things are in the database?

A. Everything a user has ever seen, ordered by application. Every bit of text the user has seen, with some minor exceptions (e.g. Microsoft Edge InPrivate mode is excluded, but Google Chrome isn’t).

Every user interaction, e.g. minimizing a window. There is an API for user activity, and third party apps can plug in to enrich data and also view stored data.

It also stores all websites you visit, even if third party.

Q. If I delete an email/WhatsApp/Signal/Teams message, is it deleted from Recall?

A. No, it stays in the database indefinitely.

Q. Are auto deleting messages in messaging apps removed from Recall?

A. No, they’re scraped by Recall and available.

Q. But if a hacker gains access to run code on your PC, it’s already game over!

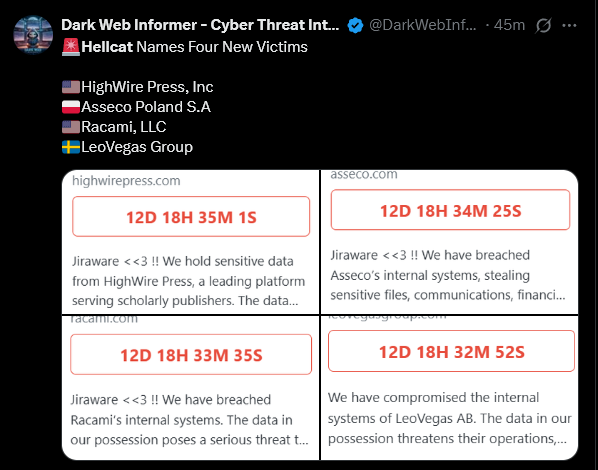

A. If you run something like an info stealer, at present they will automatically scrape things like credential stores. At scale, hackers scrape rather than touch every victim (because there are so many) and resell them in online marketplaces.

Recall enables threat actors to automate scraping everything you’ve ever looked at within seconds.

While testing this with an off-the-shelf infostealer, I used Microsoft Defender for Endpoint, which detected the off-the-shelf infostealer, but by the time the automated remediation kicked in (which took over ten minutes) my Recall data was already long gone.

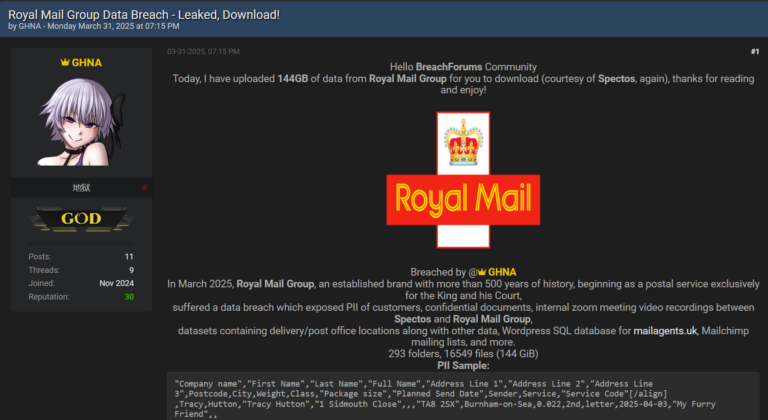

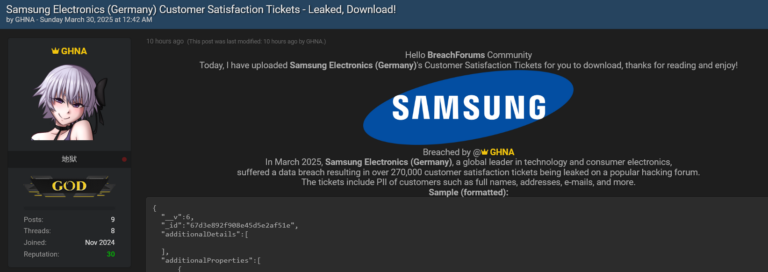

Q. Does this enable mass data breaches of websites?

A. Yes. The next time you see a major data breach where customer data is clearly visible in the breach, you’re going to presume the company that processes the data is at fault, right?

But if people have used a Windows device with Recall to access the service/app/whatever, hackers can see everything and assemble data dumps without the company who runs the service even being aware. The data is already consistently structured in the Recall database for attackers.

So prepare for AI powered super breaches. Currently credential marketplaces exist where you can buy stolen passwords — soon, you will be able to buy stolen customer data from insurance companies etc as the entire code to do this has been preinstalled and enabled on Windows by Microsoft.

Q. Did Microsoft mislead the BBC about the security of Copilot?

A. Yes.

Q. Have Microsoft misled customers about the security of Copilot?

A. Yes. For example, they describe it as an optional experience — but it is enabled by default and people can optionally disable it. That’s wordsmithing.

https://x.com/tomwarren/status/1796681578984182066?s=46

Microsoft’s CEO referred to “screenshots” in an interview about the product, but the product itself only refers to “snapshots” — a snapshot is actually a screenshot. It’s again wordsmithing for whatever reason. Microsoft just need to be super clear about what this is, so customers can make an informed choice.

Q. Recall only applies to 1 hardware device!

A. That isn’t true. There are currently 10 Copilot+ devices available to order right now from every major manufacturer:

Additionally, Microsoft’s website says they are working on support for AMD and Intel chipsets. Recall is coming to Windows 11.

Q. How do I disable Recall?

A. In initial device setup for compatible Copilot+ devices out of the box, you have to click through options to disable Recall.

In enterprise, you have to turn off Recall as it is enabled by default:

WindowsAI Policy CSP – Windows Client Management

Learn more about the WindowsAI Area in Policy CSP.

The Group Policy object for this has apparently been renamed (the MS documentation is incorrect):

https://x.com/teroalhonen/status/1796285348747862132

Q. What are the privacy implications? Isn’t this against GDPR?

A. I am not a privacy person or a legal person.

I will say that privacy people I’ve talked to are extremely worried about the impacts on households in domestic abuse situations and such.

Obviously, from a corporate point of view organisations should absolutely consider the risk of processing customer data like this — Microsoft won’t be held responsible as the data processor, as it is done at the edge on your devices — you are responsible here.

Q. Are Microsoft a big, evil company?

A. No, that’s insanely reductive. They’re super smart people, and sometimes super smart people make mistakes. What matters is what they do with knowledge of mistakes.

Q. Aren’t you the former employee who hates Microsoft?

A. No. I just wrote a blog this month praising them:

Breaking down Microsoft’s pivot to placing cybersecurity as a top priority

My thoughts on Microsoft’s last chance saloon moment on security.

Q. Is this really as harmful as you think?

A. Go to your parents’ house, your grandparents’ house etc and look at their Windows PC, look at the software installed in the past year, and try to use the device. Run some antivirus scans. There’s no way this implementation doesn’t end in tears. There’s a reason there’s a trillion dollar security industry, and that most problems revolve around malware and endpoints.

Q. What should Microsoft do?

A. In my opinion — they should recall Recall and rework it to be the feature it deserves to be, delivered at a later date. They also need to review the internal decision making that led to this situation, as this kind of thing should not happen.

Earlier this month, Microsoft’s CEO emailed all their staff saying “If you’re faced with the tradeoff between security and another priority, your answer is clear: Do security.”

We will find out if he was serious about that email.

They need to eat some humble pie and just take the hit now, or risk customer trust in their Copilot and security brands.

Frankly, few if any customers are going to cry about Recall not being immediately available, but they are absolutely going to be seriously concerned if Microsoft’s reaction is to do nothing, ship the product, slightly tinker or try to wordsmith around the problem in the media.